Testing Asynchronous APIs with Postman

I want to tell you a little story about how we enabled a client of ours to perform end-to-end testing on their multi-service architecture using Postman.

The client is a broker and lender of car finance. Their business model involves taking credit applications submitted online by car dealerships around the UK. Each application is then processed by a chain of several asynchronous SOAP-based web services before a decision is given to the customer in the dealership.

This project was part of a drive to increase the speed at which credit decisions are made. The client wanted to add a third party credit rules / decision engine to assist in lowering the decision turn-around time. The project mainly involved altering their systems to make HTTP calls to the aforementioned external credit rules engine which, based on the credit application, would suggest a list of suitable backers for the deal.

Changing the flow of information to accommodate this external rules engine was straightforward; it is, after all, just another HTTP request. All of the message communication between the client and the rules engine in this system was orchestrated in an Enterprise Service Bus (ESB). This meant I could simply push data into the client system via the web application, as a dealership would, and then wait for the credit decision to propagate through to the Windows desktop client application used by the underwriters in the office, who would give the final thumbs up.

End-to-end testing this new integration, however, presented a problem. When dipping one’s toe in the waters of automated credit decisions, you want to be confident your systems aren’t just handing money out freely. The client needed a high level of test coverage to be confident that the development had not introduced any regressions.

The client’s team began by manually keying finance applications into the dealership system, trying different combinations of applicants and credit. As you can imagine, this kind of manual testing doesn’t scale. It’s repetitive and boring for those involved, and it is extremely prone to error. This kind of problem requires a large amount of test coverage across a range of different scenarios.

I began looking at where in the flow of information between dealer and decision a “testing seam” could be added: somewhere I could hook automation into that would give enough insight into what the credit rules engine had decided for the application. The dealership system was backed by a SOAP web service for submitting finance applications, so data could be submitted in an automated way. We just couldn’t get it out the other end with ease. Yet.

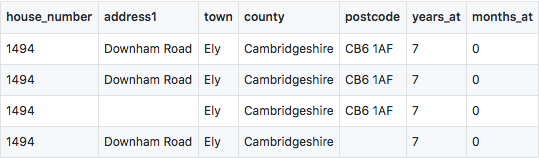

I tasked the tester with creating a series of test data sheets in Excel with all the fields needed for a finance application, and variations thereof. The intention was to write some code which integrated with the SOAP endpoints. We also asked him to include the expected outcome of the application (Accepted, Referral or Decline) as a column in the data sheet.

At the same time, a member of the client development team discovered a seam and hit upon an idea: build a simple API which reached into the database of the Windows client application used by the underwriters. Using a simple REST framework, the team and I quickly threw together an API. The output of the API was essentially debug information passed into, then stored by, the Windows client. This contained all the vital information needed for verifying the end of the chain: the finance decision and whether a backer would accept the application.

The team and I now had the means to put together a full end-to-end test of the service chain. After some exploration with Postman, the idea of custom code was dropped. I crafted a series of requests which would submit, verify, wait and finally assert that all steps in the chain were executing as expected.

Postman is a fantastic tool. Together we were able to use one collection in combination with the suite of iteration data prepared by the tester to push through multiple proposals, automatically asserting the decision at the final step of the process. All of this was executed manually via the Postman Collection Runner.

The Collection workflow was roughly as follows:

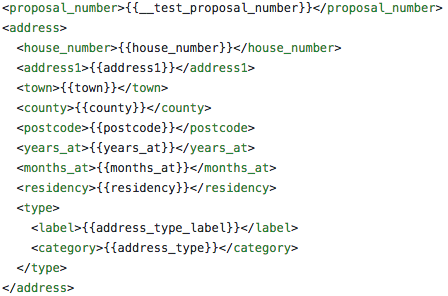

1. Prepare data sheet variables for an initial request and populate all variable placeholders.

2. Submit credit application to the initial dealership service.

3. Verify the request was successful and parse the response using cheerio (pre-loaded in Postman) storing the returned proposalId in an environment variable to be re-used throughout the run.

4. Immediately execute a GET request to confirm the credit application was successfully processed and stored in its intended form at the entry point to the service chain. Essentially, read back what had just been pushed in.

5. Wait a few seconds for the data to flow through the system via the ESB. We used: setTimeout(function(){}, 5000);

You could also submit a request to https://postman-echo.com/delay/5.

6. Make a request to the debug endpoint to grab the final credit decision. Assert the values in the response match those in the initial data sheet (note expectedDecision = data.decision; this comes from the Iteration Data).
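As a rough sketch of steps 3 and 6, a single test script could be attached at collection level, where Postman runs it after every request in the run. This is an illustration under assumptions rather than the scripts we actually used: the request names, the ProposalId element and the decision field in the debug response are all hypothetical, and pm.iterationData.get('decision') reads the same value that data.decision does.

```javascript
// Collection-level Tests script: Postman runs it after every request in the run.
// Request names, the ProposalId element and the `decision` field are hypothetical.

// Step 3: read the proposal id out of the SOAP response.
// cheerio is passed in so the helper can also be loaded outside the Postman sandbox.
function extractProposalId(cheerio, soapXml) {
  const $ = cheerio.load(soapXml, { xmlMode: true });
  // Assumes an un-prefixed element; a namespaced tag needs an escaped selector.
  return $('ProposalId').first().text().trim();
}

// Step 6: compare decisions ignoring case and surrounding whitespace,
// since spreadsheet cells often carry stray spaces.
function normaliseDecision(value) {
  return String(value).trim().toLowerCase();
}

function decisionMatches(expected, actual) {
  return normaliseDecision(expected) === normaliseDecision(actual);
}

// `pm` only exists inside Postman, so the guard keeps the helpers reusable elsewhere.
if (typeof pm !== 'undefined') {
  if (pm.info.requestName === 'Submit application') {
    pm.test('application accepted by the dealership service', function () {
      pm.response.to.have.status(200);
    });
    // Store the id so later requests can reference it as {{proposalId}}.
    const proposalId = extractProposalId(require('cheerio'), pm.response.text());
    pm.environment.set('proposalId', proposalId);
  }

  if (pm.info.requestName === 'Get decision') {
    // Expected outcome column from the current row of the data sheet.
    const expectedDecision = pm.iterationData.get('decision');
    const actualDecision = pm.response.json().decision;
    pm.test('decision matches the data sheet', function () {
      pm.expect(
        decisionMatches(expectedDecision, actualDecision),
        'expected ' + expectedDecision + ', got ' + actualDecision
      ).to.be.true;
    });
  }
}
```

Keeping the comparison in a small helper means the negative-case collection can reuse it and simply flip the expectation.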

This workflow meant that extending any test coverage was simply a matter of adding additional scenarios to the test data originally generated in Excel.

The client could now execute the initial forty-plus tests on demand, without the overhead of having people key in the applications and verify the results manually.

In addition, by duplicating the Postman Collection, I was able to write different assertions against the response from the debug endpoint. This allowed us to push through a bulk data sheet of applications we expected to fail and assert that they did.

Continuous Testing

Many of the SOAP services discussed are internal to my client, so using a Postman Monitor for these testing collections was not an option. In addition, many of the Collections required data input which, at the time of writing, is not supported by Monitors.

To work around this constraint I suggested and wrote a job for our Continuous Integration server (Jenkins) which runs on a timer and essentially does a similar job to a Monitor.

The job is broken down as follows:

- Checkout data sheets from source control

- Execute a curl request to the Postman API to download the JSON for the Collection and Environment files (I’ve stored the UUIDs locally in the build for now).

- Install Newman via npm

- Run the Newman CLI with the options set to the locally downloaded collection and environment plus the iteration data which I checked into source control. A variation of the script is shown below:
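To give a feel for the final step, here is a minimal sketch. It is not the job script itself: it uses Newman's Node API (newman.run) in place of the CLI, and it assumes the earlier steps have already left the collection, environment and data sheet in the workspace. The file names and the RUN_SUITE switch are made up for illustration.

```javascript
const path = require('path');

// Build Newman options from files that earlier job steps left in the workspace.
// All three file names are hypothetical.
function buildRunOptions(workspace) {
  return {
    collection: path.join(workspace, 'collection.json'),
    environment: path.join(workspace, 'environment.json'),
    iterationData: path.join(workspace, 'data', 'applications.csv'),
    reporters: ['cli'],
  };
}

// Run the collection and report the number of failures Newman recorded.
// `newman` is injected so the function can be exercised with a stub.
function runSuite(newman, options, done) {
  newman.run(options, function (err, summary) {
    if (err) {
      return done(err);
    }
    done(null, summary.run.failures.length);
  });
}

// Only start a real run when asked to, e.g. `RUN_SUITE=1 node run-suite.js`
// from the Jenkins job (the variable name is an assumption for this sketch).
if (process.env.RUN_SUITE === '1') {
  runSuite(require('newman'), buildRunOptions(process.cwd()), function (err, failures) {
    if (err) {
      console.error(err);
    }
    // A non-zero exit code marks the Jenkins build as failed.
    process.exitCode = err || failures > 0 ? 1 : 0;
  });
}
```

Setting the exit code from the failure count is what lets the CI server flag a broken service chain without anyone reading the console output.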

This has been of real benefit to this particular client, giving their testing efforts a much needed boost by providing them with the ability to quickly assert their systems are operational and make sure they’re not throwing away cash!

For me personally, this was a great experience. The more I discover about Postman, the more I love it. Postman has become an essential part of my workflow when working in any service-based environment. It’s so much more than a nice REST client! I’ve also enjoyed the challenge of taking a multi-service architecture and testing it thoroughly, end-to-end.

If you’ve found this at all insightful and want to know more, I would love to hear from you. You can find us on Twitter and Facebook or simply leave a comment.
